An article about computational results is advertising, not scholarship. The actual scholarship is the full software environment, code and data, that produced the result. —Claerbout and Karrenbach (1992)

[Link to Claerbout and Karrenbach (1992) article]

Pre-lecture materials

Read ahead

Before class, you can prepare by reading the following materials:

Statistical programming, Small mistakes, big impacts by Simon Schwab and Leonhard Held

Reproducible Research: A Retrospective by Roger Peng and Stephanie Hicks

Acknowledgements

Material for this lecture was borrowed and adapted from

- https://ropensci.github.io/reproducibility-guide/sections/introduction/

- https://rdpeng.github.io/Biostat776/

- Reproducible Research: A Retrospective by Roger Peng and Stephanie Hicks

Learning objectives

At the end of this lesson you will:

- Know the difference between replication and reproducibility

- Identify valid reasons why replication and/or reproducibility is not always possible

- Identify the types of reproducibility

- Identify key components to enable reproducible data analyses

Introduction

This lecture is about reproducible reporting, and I want to take the opportunity to cover some basic concepts and ideas related to it, in case you have not heard of it or do not know what it is.

Before we get to reproducibility, we need to cover a little background with respect to how science works (even if you are not a scientist, this is important). The basic idea is that in science, replication is the most important element of verifying and validating findings. So if you claim that X causes Y, or that Vitamin C improves disease, or that something causes a problem, what happens is that other scientists that are independent of you will try to investigate that same question and see if they come up with a similar result. If lots of different people come up with the same result and replicate the original finding, then we tend to think that the original finding was probably true and that this is a real relationship or real finding.

The ultimate standard in strengthening scientific evidence is replication. The goal is to have independent people do independent things with different data, different methods, and different laboratories, and see if they get the same result. There is a sense that if a relationship in nature is truly there, then it should be robust to having different people discover it in different ways. Replication is particularly important in areas where findings can have big policy impacts or can influence regulatory decisions.

What is wrong with replication?

There is really nothing wrong with it. This is what science has been doing for a long time, through hundreds of years. And there is nothing wrong with it today. But the problem is that it is becoming more and more challenging to do replication or to replicate other studies. Here are some reasons:

- Studies are often much larger and more costly than they used to be. If you want to do ten versions of the same study, you need ten times as much money, and there is not as much money around as there used to be.

- Sometimes it is difficult to replicate a study because if the original study took 20 years to do, it is difficult to wait around another 20 years for replication.

- Some studies are just plain unique, such as studying the impact of a massive earthquake in a very specific location and time. If you are looking at a unique situation in time or a unique population, you cannot readily replicate that situation.

There are a lot of good reasons why you cannot replicate a study. If you cannot replicate a study, is the alternative just to do nothing, just let that study stand by itself?

The idea behind reproducible reporting is to create a kind of minimum standard (or a middle ground): we will not be replicating a study, but we can do something in between. The basic problem is that you have the gold standard, which is replication, and then you have the worst standard, which is doing nothing. What can we do that is in between the gold standard and doing nothing? That is where reproducibility comes in. It bridges the gap between replication and nothing.

In non-research settings, often full replication is not even the point. Often the goal is to preserve something to the point where anybody in an organization can repeat what you did (for example, after you leave the organization). In this case, reproducibility is key to maintaining the history of a project and making sure that every step along the way is clear.

Summary

Replication, whereby scientific questions are examined and verified independently by different scientists, is the gold standard for scientific validity.

Replication can be difficult and often there are no resources to independently replicate a study.

Reproducibility, whereby data and code are re-analyzed by independent scientists to obtain the same results as the original investigator, is a reasonable minimum standard when replication is not possible.

Reproducibility to the Rescue

Why do we need this kind of middle ground? I have not clearly defined reproducibility yet, but the basic idea is that you need to make the data available for the original study and the computational methods available so that other people can look at your data and run the kind of analysis that you have run, and come to the same findings that you found.

Reproducible reporting is about validating the data analysis. Because you are not collecting independent data using independent methods, it is more difficult to validate the scientific question itself. But if you can take someone’s data and reproduce their findings, then you can, in some sense, validate the data analysis.

This involves having the data and the code because more likely than not, the analysis will have been done on the computer using some sort of programming language, like R. So you can take their code and their data and reproduce the findings that they come up with. Then you can at least have confidence that you can reproduce the analysis.

Recently, there has been a lot of discussion of reproducibility in the media and in the scientific literature. The journal Science had a special issue on reproducibility and data replication.

Other journals have specific policies to promote reproducibility in the manuscripts they publish. For example, the Journal of the American Statistical Association (JASA) requires authors to submit the code and data needed to reproduce their analyses, and a set of Associate Editors of Reproducibility reviews those materials as part of the review process.

Why does this matter?

Here is an example. In 2012, a feature on the TV show 60 Minutes looked at a major incident at Duke University where many results involving a promising cancer test were found to be not reproducible. This led to a number of studies and clinical trials having to be stopped, followed by an investigation which is still ongoing.

[Source on YouTube]

Types of reproducibility

What are the different kinds of reproducible research? Enabling reproducibility can be complicated, but by separating out some of the levels and degrees of reproducibility the problem can become more manageable because we can focus our efforts on what best suits our specific scientific domain. Victoria Stodden (2014), a prominent scholar on this topic, has identified some useful distinctions in reproducible research:

Computational reproducibility: when detailed information is provided about code, software, hardware and implementation details.

Empirical reproducibility: when detailed information is provided about non-computational empirical scientific experiments and observations. In practice this is enabled by making data freely available, as well as details of how the data was collected.

Statistical reproducibility: when detailed information is provided about the choice of statistical tests, model parameters, threshold values, etc. This mostly relates to pre-registration of study design to prevent p-value hacking and other manipulations.

[Source]

Elements of computational reproducibility

What do we need for computational reproducibility? There are a variety of ways to talk about this, but one basic definition that we have come up with is that four things are required to make results reproducible:

Analytic data. The data that were used for the analysis that was presented should be available for others to access. This is different from the raw data because very often in a data analysis the raw data are not all used for the analysis, but rather some subset is used. It may be interesting to see the raw data but impractical to actually have it. Analytic data is key to examining the data analysis.

Analytic code. The analytic code is the code that was applied to the analytic data to produce the key results. This may be preprocessing code, regression modeling code, or really any other code used to produce the results from the analytic data.

Documentation. Documentation of that code and the data is very important.

Distribution. Finally, there needs to be some standard means of distribution, so that all of the data and the code are easily accessible.
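As one illustration of how the analytic data and analytic code might be made verifiable once they are distributed, here is a minimal sketch in Python (the same idea carries over to R). It is not a method prescribed in this lecture. The function names `build_manifest` and `verify_manifest` are hypothetical, and the sketch assumes the whole project lives in a single directory.

```python
import hashlib
from pathlib import Path


def sha256_of_file(path: Path) -> str:
    """Return the SHA-256 hex digest of a file, read in chunks."""
    digest = hashlib.sha256()
    with path.open("rb") as handle:
        for chunk in iter(lambda: handle.read(65536), b""):
            digest.update(chunk)
    return digest.hexdigest()


def build_manifest(project_dir: Path) -> dict[str, str]:
    """Map each file's path (relative to the project) to its checksum.

    Hypothetical helper: the author would run this once and publish the
    result alongside the data and code.
    """
    return {
        path.relative_to(project_dir).as_posix(): sha256_of_file(path)
        for path in sorted(project_dir.rglob("*"))
        if path.is_file()
    }


def verify_manifest(project_dir: Path, manifest: dict[str, str]) -> list[str]:
    """Return the relative paths that are missing or whose contents changed.

    Hypothetical helper: a reader would run this after downloading.
    """
    mismatched = []
    for relative_path, expected in manifest.items():
        path = project_dir / relative_path
        if not path.is_file() or sha256_of_file(path) != expected:
            mismatched.append(relative_path)
    return mismatched
```

An empty list from `verify_manifest` tells a reader that the files they downloaded are byte-for-byte the ones the author analyzed. This covers only distribution; it says nothing about whether the analysis itself is correct.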

Summary

Reproducible reporting is about validating the data analysis

There are multiple types of reproducibility

There are four elements to computational reproducibility

“X” to “Computational X”

What is driving this need for a “reproducibility middle ground” between replication and doing nothing? For starters, there are a lot of new technologies on the scene in many different fields of study, including biology, chemistry, and environmental science. These technologies allow us to collect data at a much higher throughput, so we end up with very complex and very high-dimensional data sets. These data sets can be collected almost instantaneously compared to even just ten years ago; the technology has allowed us to create huge data sets at essentially the touch of a button. Furthermore, we have the computing power to take existing (already huge) databases and merge them into bigger and bigger databases. Finally, the massive increase in computing power has allowed us to implement more sophisticated and complex analysis routines.

The analyses themselves, the models that we fit and the algorithms that we run, are much much more complicated than they used to be. Having a basic understanding of these algorithms is difficult, even for a sophisticated person, and it is almost impossible to describe these algorithms with words alone. Understanding what someone did in a data analysis now requires looking at code and scrutinizing the computer programs that people used.

The bottom line with all these different trends is that for every field “X”, there is now “Computational X”. There’s computational biology, computational astronomy—whatever it is you want, there is a computational version of it.

Example: machine learning in the life sciences

One example of an area where reproducibility is important comes from research that I have conducted in the area of machine learning in the life sciences.

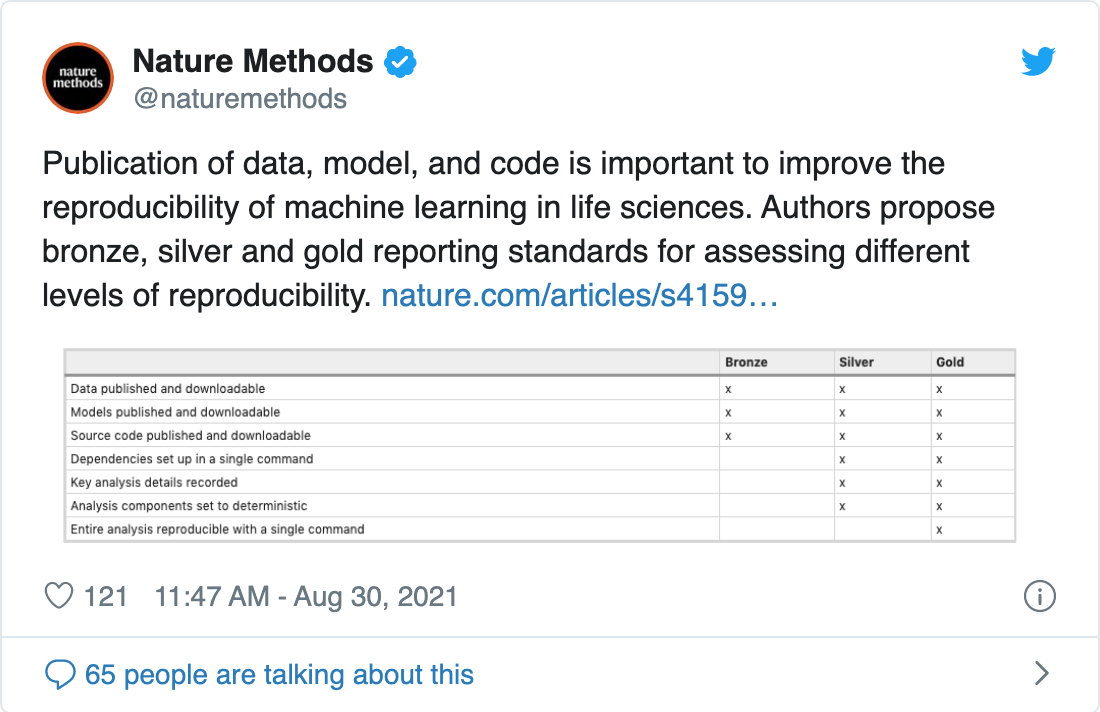

Figure 1: Article in Nature Methods on ‘Reproducibility standards for machine learning in the life sciences’

[Link to tweet and link to article]

In the above article, computational reproducibility is not thought of as a binary property; rather, it sits on a sliding scale that reflects the time needed to reproduce a work. Published works fall somewhere on this scale, which is bookended by ‘forever’, for a completely irreproducible work, and ‘zero’, for a work where one can automatically repeat the entire analysis with a single keystroke.

In many cases it is difficult to impose a single standard that divides work into ‘reproducible’ and ‘irreproducible’. Instead, the article proposes a menu of three standards with varying degrees of rigor for computational reproducibility:

Bronze standard. The authors make the data, models and code used in the analysis publicly available. The bronze standard is the minimal standard for reproducibility. Without data, models and code, it is not possible to reproduce a work.

Silver standard. In addition to meeting the bronze standard: (1) the dependencies of the analysis can be downloaded and installed in a single command; (2) key details for reproducing the work are documented, including the order in which to run the analysis scripts, the operating system used and system resource requirements; and (3) all random components in the analysis are set to be deterministic. The silver standard is a midway point between minimal availability and full automation. Works that meet this standard will take much less time to reproduce than ones only meeting the bronze standard.

Gold standard. The work meets the silver standard, and the authors make the analysis reproducible with a single command. The gold standard for reproducibility is full automation. When a work meets this standard, it will take little to no effort for a scientist to reproduce it.
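To make two of these requirements concrete, here is a minimal Python sketch of a toy analysis whose random component is deterministic (part of the silver standard) and which runs from a single entry point (in the spirit of the gold standard). The function name `run_analysis`, the seed value, and the simulated data are all assumptions for illustration and do not come from the article.

```python
import random


def run_analysis(seed: int = 2024, n_draws: int = 1000) -> float:
    """Toy 'analysis': estimate the mean of simulated standard normal data.

    The seed is an explicit argument, so the random component is
    deterministic: the same seed yields the same draws.
    """
    # Use a local generator rather than the module-level one, so that no
    # other code can silently change the random state.
    rng = random.Random(seed)
    draws = [rng.gauss(0.0, 1.0) for _ in range(n_draws)]
    return sum(draws) / len(draws)


if __name__ == "__main__":
    # Single entry point: running this file repeats the whole analysis.
    print(f"estimated mean: {run_analysis():.6f}")
```

Calling `run_analysis()` twice with the same seed in the same environment returns the same number, while a different seed gives a different estimate. A real project would also need its dependencies and data fetched automatically before this one command could count toward the gold standard.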

The Data Science Pipeline

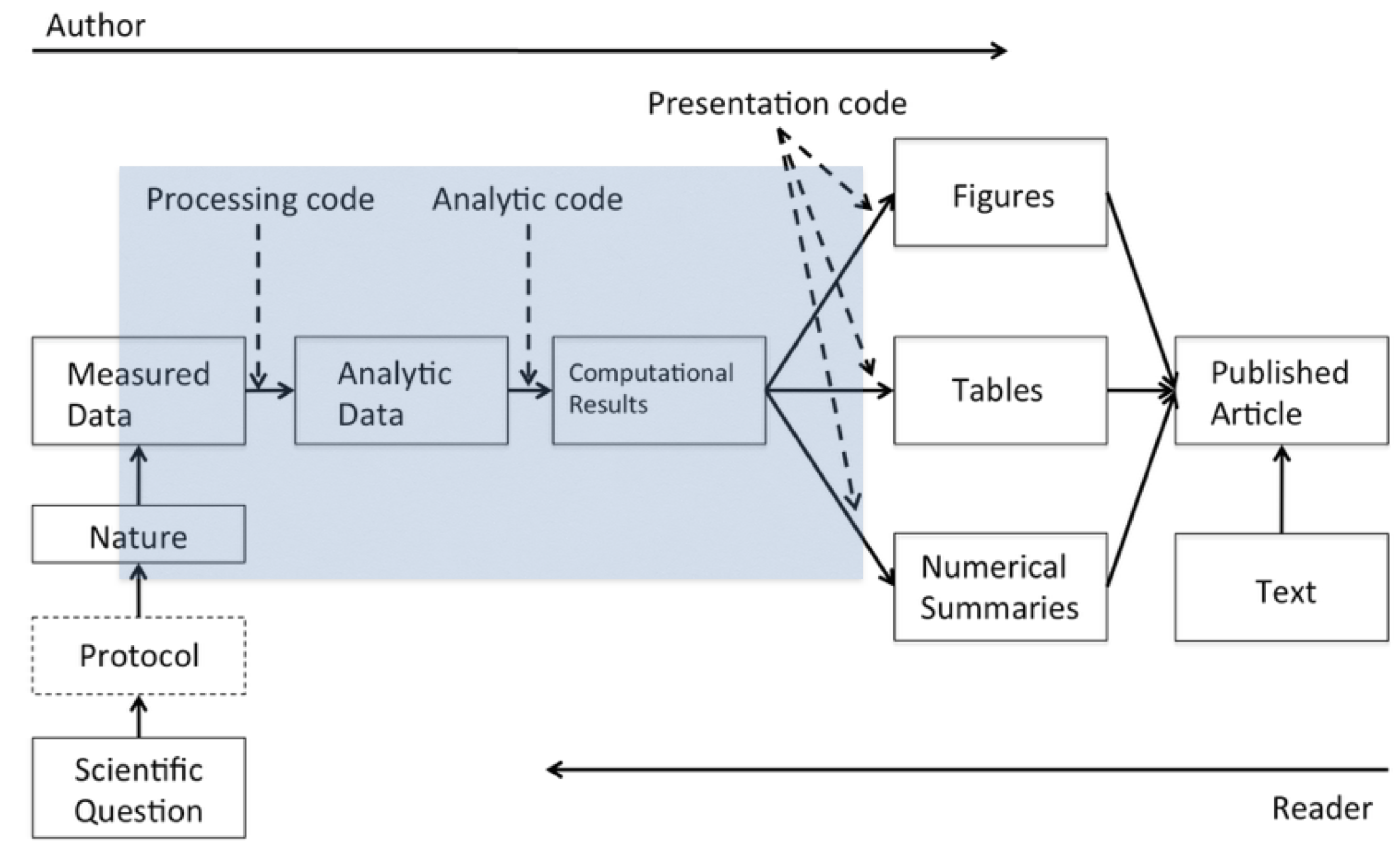

The basic issue is when you read a description of a data analysis, such as in an article or a technical report, for the most part, what you get is the report and nothing else. Of course, everyone knows that behind the scenes there’s a lot that went into this report and that is what I call the data science pipeline.

In this pipeline, there are two “actors”: the author of the report/article and the reader. The author goes from left to right along this pipeline, and the reader goes from right to left. If you are the reader, you read the article, and you may want to know more about what happened, for example:

- Where are the data?

- What methods were used here?

The basic idea behind computational reproducibility is to focus on the elements in the blue box: the analytic data and the computational results. With computational reproducibility the goal is to allow the author of a report and the reader of that report to “meet in the middle”.

Authors and Readers

It is important to realize that there are multiple players when you talk about reproducibility–there are different types of parties that have different types of interests. There are authors who produce research and they want to make their research reproducible. There are also readers of research and they want to reproduce that work. Everyone needs tools to make their lives easier.

One current challenge is that authors of research have to undergo considerable effort to make their results available to a wide audience. Publishing data and code today is not necessarily a trivial task. Although there are a number of resources available now that were not available even five years ago, it is still a bit of a challenge to get things out on the web (or at least distributed widely). Resources like GitHub, kipoi, RPubs, and various data repositories have made a big difference, but there is still a long way to go with respect to building up the public reproducibility infrastructure.

Furthermore, even when data and code are available, readers often have to download the data, download the code, and then they have to piece everything together, usually by hand. It’s not always an easy task to put the data and code together. Also, readers may not have the same computational resources that the original authors did. If the original authors used an enormous computing cluster, for example, to do their analysis, the readers may not have that same enormous computing cluster at their disposal. It may be difficult for readers to reproduce the same results.
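One small step an author can take to ease this burden is to record the computing environment alongside the results, so that readers know what they are trying to match. The following Python sketch uses only the standard library. The function name `describe_environment` and the package names passed to it are hypothetical, and the snapshot is only a starting point, not a complete description of an environment.

```python
import json
import platform
from importlib import metadata


def describe_environment(packages: list[str]) -> dict:
    """Collect basic facts about the machine and the installed packages.

    `packages` lists the distributions the analysis depends on; a package
    that is not installed is recorded as None instead of raising an error.
    """
    versions = {}
    for name in packages:
        try:
            versions[name] = metadata.version(name)
        except metadata.PackageNotFoundError:
            versions[name] = None
    return {
        "python": platform.python_version(),
        "implementation": platform.python_implementation(),
        "operating_system": platform.platform(),
        "machine": platform.machine(),
        "packages": versions,
    }


if __name__ == "__main__":
    # The package names here are examples only.
    print(json.dumps(describe_environment(["numpy", "pandas"]), indent=2))
```

Saving this output next to the published results documents the operating system and software versions that were used. It does not solve the problem of a reader lacking comparable hardware, such as a computing cluster.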

Generally, the toolbox for doing reproducible research is small, although it’s definitely growing. In practice, authors often just throw things up on the web. There are journals and supplementary materials, but they are famously disorganized. There are only a few central databases that authors can take advantage of to post their data and make it available. So if you are working in a field that has a central database that everyone uses, that is great. If you are not, then you have to assemble your own resources.

Summary

The process of conducting and disseminating research can be depicted as a “data science pipeline”

Readers and consumers of data science research are typically not privy to the details of the data science pipeline

One view of reproducibility is that it gives research consumers partial access to the raw pipeline elements.

Post-lecture materials

Final Questions

Here are some post-lecture questions to help you think about the material discussed.

Questions:

What is the difference between replication and reproducibility?

Why can replication be difficult to achieve? Why is reproducibility a reasonable minimum standard when replication is not possible?

What is needed to reproduce the results of a data analysis?